What’s the point of building a load of clean, modular notebooks if you have to kick each one off by hand and remember the order they need to run in?

This is where orchestration comes in: building ETL Pipelines which run tasks – notebooks, stored procedures, dataflows etc. – in the right order with configurable settings. It is the key to automation.

You can go back to the case study here.

Orchestrating the Workflow

We have built all our code in modular steps for flexibility and maintainability.

We now need to define the order in which our notebooks run. In the future, we may evolve this project to use Databricks’s Spark Declarative Pipelines, or swap some steps for materialised lake views or dataflows. For now, we have kept it simple and used notebooks only.

In Databricks, the tool for orchestration is ‘Jobs’ (formerly Workflows). In Fabric, you would build a ‘pipeline’.

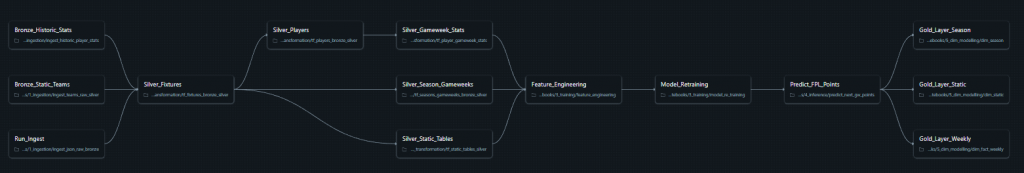

We have built 2 Jobs for this project –

- Historic pipeline (pictured) – a full rebuild of the workspace, such as for initialising the production environment.

- Incremental pipeline – only the notebooks needed to update the data after each gameweek.

Parameters & Configurable Settings

Parameters are what make orchestration flexible. Instead of hardcoding everything, we pass values that control how the pipeline behaves. In our case, we’ve got two job-level parameters:

- Environment (e.g., dev, prod)

- Protocol (HIST or INCR)

The same notebooks run, but their behaviour changes based on these values. That’s the power of parameters.

In Databricks, we grab parameters using dbutils.widgets.get(). In Fabric, you use parameter cells in the notebook.
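As a minimal sketch of that step, the snippet below reads and validates the two job-level parameters. It assumes the widgets are named env and protocol, as in this project; the helper read_job_params is hypothetical. The getter is passed in as an argument so the same function works with dbutils.widgets.get inside Databricks and with a plain dict elsewhere.

```python
ALLOWED_ENVS = {"dev", "prod"}
ALLOWED_PROTOCOLS = {"HIST", "INCR"}


def read_job_params(get_widget):
    """Read and validate the two job-level parameters.

    `get_widget` is any callable mapping a parameter name to its string
    value. In a Databricks notebook that would be `dbutils.widgets.get`.
    """
    env = get_widget("env").strip().lower()
    protocol = get_widget("protocol").strip().upper()

    if env not in ALLOWED_ENVS:
        raise ValueError(f"Unexpected env {env!r}; expected one of {sorted(ALLOWED_ENVS)}")
    if protocol not in ALLOWED_PROTOCOLS:
        raise ValueError(f"Unexpected protocol {protocol!r}; expected one of {sorted(ALLOWED_PROTOCOLS)}")
    return env, protocol


# In a Databricks notebook (assumed usage):
#   env, protocol = read_job_params(dbutils.widgets.get)
# Outside Databricks, a plain dict can stand in for the widgets:
local_params = {"env": "dev", "protocol": "INCR"}
env, protocol = read_job_params(local_params.__getitem__)
print(env, protocol)
```

Failing fast on an unexpected value is a design choice here: a typo in a job parameter stops the run at the first notebook rather than writing to the wrong place.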

Parameters can be:

- Fixed – like ours (env and protocol)

- Dynamic – current date, pipeline run ID, or context-aware values

- Scoped – job-level (applies to all tasks) or task-level (specific to one step)

This flexibility means you can run the same pipeline for multiple scenarios without rewriting a line of code.
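To illustrate how two parameters can change what a notebook does, here is a rough sketch that turns env and protocol into run settings. The schema naming (fpl_dev, fpl_prod) and the mapping of HIST to overwrite and INCR to append are assumptions for illustration, not necessarily how this project is configured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunSettings:
    schema: str      # hypothetical target schema name
    write_mode: str  # "overwrite" or "append"


def resolve_settings(env: str, protocol: str) -> RunSettings:
    """Translate the job parameters into concrete settings for a notebook."""
    # Assumption: one schema per environment, named fpl_<env>.
    schema = f"fpl_{env}"
    # Assumption: HIST rebuilds tables from scratch, INCR adds the new gameweek.
    modes = {"HIST": "overwrite", "INCR": "append"}
    if protocol not in modes:
        raise ValueError(f"Unknown protocol {protocol!r}")
    return RunSettings(schema=schema, write_mode=modes[protocol])


# The same function serves every scenario; only the parameters differ.
for env, protocol in [("dev", "HIST"), ("prod", "INCR")]:
    print(env, protocol, resolve_settings(env, protocol))
```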

There are lots of other configurable settings, such as cluster types, retries and alerting, which make the pipeline more resilient and production-ready.

You can add logic like conditional steps (run this only if X is true), loops (for each item in a list), and dependencies, so a task only starts once its upstream task has succeeded (or, if you choose, failed). This is where pipelines start to feel like real workflows rather than a simple script chain.
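The dependency idea can be sketched in plain Python. This is a toy model of what an orchestrator does and not the Databricks Jobs API: the task names are hypothetical, and the rule that a task is skipped unless all of its upstream tasks succeeded is one assumed policy among several a real tool offers.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each task maps to the set of tasks it depends on.
dependencies = {
    "ingest_raw": set(),
    "build_dimensions": {"ingest_raw"},
    "build_facts": {"ingest_raw"},
    "train_model": {"build_dimensions", "build_facts"},
    "predict_points": {"train_model"},
}


def run_order(deps):
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())


def run_pipeline(deps, run_task):
    """Run tasks in dependency order.

    `run_task(name)` returns True on success. A task whose upstream tasks
    did not all succeed is marked "skipped" and never executed.
    """
    status = {}
    for task in run_order(deps):
        upstream = deps.get(task, ())
        if all(status.get(up) == "success" for up in upstream):
            status[task] = "success" if run_task(task) else "failed"
        else:
            status[task] = "skipped"
    return status


# Simulate a failure in one task and see what gets skipped downstream.
print(run_pipeline(dependencies, lambda name: name != "build_facts"))
```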

Initialising Production Workspace

Now that we’ve built both the historic and incremental pipelines, it’s time to go live. We switch the job parameters to prod and HIST and run the historic pipeline to populate the production schemas.

Up to now, the raw layer only had data up to Gameweek 8. After the historic run, we can kick off the incremental pipeline and upload the next gameweeks – we’re currently at GW21. At this point, the system is live with predicted FPL points, plus all team and player stats up to the most recent gameweek.

From here, after each gameweek, we can grab the latest gameweek data from the FPL API, upload it, and run the incremental pipeline. In the future, I’d like to add a watermark mechanism that knows when the next gameweek ends and automatically triggers the pipeline (say, 12 hours later). That would make the workflow fully automated, but it’s out of scope for this MVP.
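The trigger check for that future mechanism might look something like the sketch below. None of this is built yet: the function name, the idea of storing the last loaded gameweek as the watermark, and the example end time are all assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone

# Assumed delay between a gameweek ending and the pipeline running.
TRIGGER_DELAY = timedelta(hours=12)


def should_trigger(gameweek: int, gameweek_end: datetime,
                   watermark: int, now: datetime) -> bool:
    """Decide whether the incremental pipeline should run for `gameweek`.

    `watermark` is the last gameweek already loaded (hypothetical storage).
    The pipeline runs only once the delay has elapsed and the gameweek
    has not been loaded before.
    """
    already_loaded = gameweek <= watermark
    delay_elapsed = now >= gameweek_end + TRIGGER_DELAY
    return delay_elapsed and not already_loaded


# Made-up example: GW22 ends at 22:00 UTC, GW21 is already loaded.
gw_end = datetime(2025, 1, 20, 22, 0, tzinfo=timezone.utc)
print(should_trigger(22, gw_end, watermark=21, now=gw_end + timedelta(hours=13)))
```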

We’re nearly there. We now have a machine learning model predicting FPL points and a dimensional model running in production. We are ready to visualise all this data and gain some insights!

BW