What is Machine Learning?

Everyone wants “AI”. Most people have no idea what it actually is or why they need it. AI isn’t magic; it’s a tool. Using it properly matters more than forcing LLMs and agent workflows into a business that still has someone typing numbers into Excel. To get the best out of applying AI and ML, we need to understand the foundations of what they are and how they work.

Here’s how Copilot defines them:

- Artificial Intelligence (AI): the field of computer science focused on creating systems that can perform tasks that typically require human intelligence, such as reasoning, learning, and problem-solving.

- Machine Learning (ML): a subset of AI that enables systems to learn patterns and improve performance from data without being explicitly programmed.

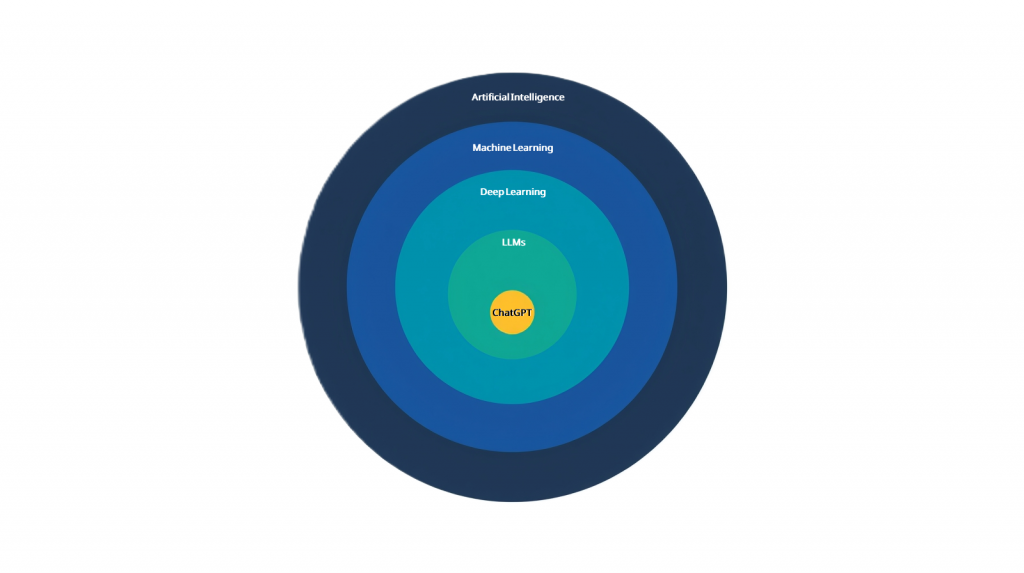

Broad, isn’t it? That’s why people get confused. AI and ML can mean almost anything in computer science that automates, predicts or improves performance. This graphic highlights that a tool such as ‘ChatGPT’ is a tiny subset of the whole field of AI/ML.

This blog is a very brief overview of a few concepts in ML – I’m sure there will be many more blogs on this in the future! It should set up the next blog, where we apply it to the FPL project.

What’s important to understand is that there are so many flavours, use cases and techniques in ML and they don’t all solve the same problem.

Just “plugging the data in” to something without understanding what it is or how to improve/measure/assess it is a very bad way of problem solving!

Statistics Underpin Machine Learning

At its core, ML is statistics on steroids. Every algorithm, whether it’s for predicting football scores or recognising images, has the same goal: measure how well the algorithm performs and improve that performance over many iterations. The difference between a human and a machine is compute power. Machines can crunch millions of calculations in seconds, something no analyst with a spreadsheet can match.
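The “measure, then improve over many iterations” loop can be sketched in a few lines. This is only a minimal illustration of the idea, not how any particular library does it: it fits a straight line with gradient descent, and the data, the learning rate and the function names are all invented for the example.

```python
# Hypothetical (minutes_played, points) pairs, invented for illustration.
DATA = [(10, 1.0), (30, 2.0), (60, 3.5), (90, 5.0)]


def mse(w, b, rows):
    """Mean squared error of the line y = w * x + b on the given rows."""
    return sum((w * x + b - y) ** 2 for x, y in rows) / len(rows)


def fit(rows, learning_rate=0.0001, steps=2000):
    """Fit w and b by gradient descent, recording the error after each step."""
    w, b = 0.0, 0.0
    n = len(rows)
    history = []
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in rows) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in rows) / n
        # Nudge both parameters in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        history.append(mse(w, b, rows))
    return w, b, history


w, b, history = fit(DATA)
print(f"error after first step: {history[0]:.3f}, after last step: {history[-1]:.3f}")
```

The learning rate is deliberately small because the feature (minutes) is unscaled; with a larger value the updates would overshoot and the error would grow instead of shrink.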

There are hundreds of algorithms, each specialised for certain tasks. In my FPL project, we’ll use XGBoost, a powerful implementation of gradient boosting that combines lots of weak models to make a strong one. For my Masters dissertation, I worked on Neural Networks, which are loosely inspired by how the brain processes information. Different tools, same principle: use data, apply maths, and iterate until the model gets better.
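To make “lots of weak models” a bit more concrete, here is a toy sketch of gradient boosting for squared error. It is not XGBoost (which adds regularisation, deeper trees and many optimisations); each weak model here is a one-split “stump” fitted to whatever error the previous rounds left behind. The data and all function names are assumptions made up for the illustration.

```python
def fit_stump(xs, residuals):
    """Find the single threshold on x that best fits the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the max so both sides are non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left)
        error += sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def boost(xs, ys, rounds=20, learning_rate=0.5):
    """Start from the mean, then repeatedly fit a stump to the remaining error."""
    base = sum(ys) / len(ys)
    stumps = []
    preds = [base] * len(xs)
    for _ in range(rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)]
    return {"base": base, "stumps": stumps, "learning_rate": learning_rate}


def predict(model, x):
    total = sum(predict_stump(s, x) for s in model["stumps"])
    return model["base"] + model["learning_rate"] * total


def mse(predictions, ys):
    return sum((p - y) ** 2 for p, y in zip(predictions, ys)) / len(ys)


# Invented toy data: one feature, one target.
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.5, 3.0, 3.5, 6.0, 6.5]

model = boost(xs, ys)
baseline_error = mse([model["base"]] * len(xs), ys)
boosted_error = mse([predict(model, x) for x in xs], ys)
print(f"mean-only error: {baseline_error:.3f}, boosted error: {boosted_error:.3f}")
```

No single stump is a good model on its own, but each round chips away at the remaining error, which is the “weak models, strong ensemble” idea in miniature.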

Models

I’ve used the word “model” a few times, so let’s clear that up. A model is the result of training an algorithm on data. Training means feeding the algorithm examples, letting it learn patterns, and adjusting until it predicts well enough. Once trained, the model is what you use to make predictions.

To train a model, you split your data into two sets, train and test, often 80/20 (simplifying here, as you can also have a third validation set). The algorithm learns from the training set only, and you then measure its performance on the test set, which it has never seen. This simulates real-world conditions and checks how well the model handles unseen data. It’s critical for spotting underfitting (too simple, poor accuracy even on the training data) and overfitting (too complex, great on training data but useless in production).
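A minimal sketch of an 80/20 split, using only the standard library. The rows are invented, and the “memoriser” is a deliberately silly model included only to show what overfitting looks like: perfect on the training set, poor on anything it hasn’t seen.

```python
import random


def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of rows and split it into (train, test)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def mse(predict, rows):
    """Mean squared error of a prediction function over (x, y) rows."""
    return sum((predict(x) - y) ** 2 for x, y in rows) / len(rows)


# Ten hypothetical (minutes_played, points) rows, invented for illustration.
rows = [(minutes, minutes / 18) for minutes in range(10, 110, 10)]
train, test = train_test_split(rows)

# An extreme overfitter: memorise every training row, guess 0.0 otherwise.
lookup = dict(train)


def memoriser(x):
    return lookup.get(x, 0.0)


train_error = mse(memoriser, train)
test_error = mse(memoriser, test)
print(f"train rows: {len(train)}, test rows: {len(test)}")
print(f"train error: {train_error:.3f}, test error: {test_error:.3f}")
```

Fixing the seed makes the split repeatable, which matters when you want to compare two models on exactly the same test rows.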

Models aren’t static. You can retrain them with new data to keep them accurate. That’s why storing them properly matters. Platforms like Microsoft Fabric and Databricks have registries where you can save models, version them, and manage their lifecycle. This makes it easy to deploy the right model or roll back if something breaks.

One tool worth knowing is MLflow. It tracks experiments, logs metrics, and handles model packaging. In short, it keeps your machine learning process organised so you’re not juggling random files and wondering which version actually works. Tools like MLflow support good MLOps practice, helping you maintain the integrity of your data and models and keep governance in place over time.

Feature Engineering

Feature engineering is one of the most important steps in building a good model. In simple terms, it’s about turning raw data into something the algorithm can actually use. Models work with numbers, so if you have categorical data like player positions or team names, you need to convert them into a numerical format. One common way is one-hot encoding, which creates a separate column for each category and marks each row with 1s and 0s. For the FPL project, most of the stats we have are purely numerical, so we don’t need to encode a lot, but it’s worth noting.
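Here is a small sketch of one-hot encoding written by hand so the mechanics are visible. The position labels are assumed for illustration; in practice you would more likely reach for a library helper than roll your own.

```python
def one_hot(values):
    """Return the category columns and one 0/1 row per input value."""
    categories = sorted(set(values))
    rows = [[1 if value == category else 0 for category in categories] for value in values]
    return categories, rows


# Hypothetical player positions, invented for illustration.
positions = ["GK", "DEF", "MID", "FWD", "MID"]
columns, encoded = one_hot(positions)

print(columns)
for position, row in zip(positions, encoded):
    print(position, row)
```

Each row contains exactly one 1, in the column for its category. One thing to watch: the column list should be fixed from the training data and reused later, otherwise new data could produce columns in a different order.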

The goal is to create a single dataset, usually one big table, with as many useful columns (features) as possible. These features give the algorithm the best chance of figuring out what matters when making predictions. In my FPL project, we will engineer features from player and team stats to predict total points. During training, the model works out how much weight to give each feature based on how useful it is for predicting the target. Over time, this process builds a model that understands which factors drive the outcome.

For example, stats like minutes played and expected goals are likely weighted heavily in our model. The more a player is on the pitch and the higher their expected goals, the greater their chance of scoring FPL points. Compare that to someone sat on the bench who never gets a meaningful chance. Statistics such as average penalties missed per game may not be weighted as heavily – but who knows, the machine might uncover a hidden pattern and spark the next wave of football tactics!

Inference – Using Models in the Real World

Once a model is trained and stored, the next step is using it to make predictions. This is called inference. The idea is simple: you build a new dataset that the model has never seen before, using the same features you used during training. The model then takes this unseen data and produces an output based on what it learned.

For example, in my FPL project, after training the model on historical player stats, we can feed it the latest gameweek data and ask it to predict total points for the upcoming fixtures. The model applies the weights it learned during training and gives its best guess on the expected points in the next fixture. This is where machine learning becomes useful in practice – turning patterns in past data into predictions about the future.
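The train-then-infer flow might look something like this. It is a sketch under heavy assumptions: a one-feature straight-line model stands in for the real thing, and the feature name, target name and numbers are all invented. The point is only that inference reuses what was learned and expects the same feature to be present in the new rows.

```python
def train(rows, feature, target):
    """Fit a least-squares line for target against a single feature."""
    xs = [row[feature] for row in rows]
    ys = [row[target] for row in rows]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope /= sum((x - mean_x) ** 2 for x in xs)
    return {"feature": feature, "slope": slope, "intercept": mean_y - slope * mean_x}


def infer(model, rows):
    """Apply the learned parameters to rows the model has never seen."""
    # A row missing the training feature raises KeyError, which is what we want.
    return [model["intercept"] + model["slope"] * row[model["feature"]] for row in rows]


# Hypothetical historical data, invented for illustration.
history = [
    {"expected_goals": 0.1, "total_points": 2.0},
    {"expected_goals": 0.3, "total_points": 3.0},
    {"expected_goals": 0.5, "total_points": 4.0},
    {"expected_goals": 0.8, "total_points": 5.5},
]
model = train(history, feature="expected_goals", target="total_points")

# New, unseen rows: same feature column, no target column.
next_gameweek = [{"expected_goals": 0.6}, {"expected_goals": 0.2}]
predictions = infer(model, next_gameweek)
print([round(p, 2) for p in predictions])
```

Notice that the new rows carry no `total_points` value at all; producing that number is the model’s job.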

Hyperparameter Tuning

Every algorithm comes with settings called hyperparameters. These control how the algorithm behaves, like how deep a ‘tree’ can grow in XGBoost or how many layers a Neural Network uses. Choosing the right combination can make a huge difference to performance.

Hyperparameter tuning is the process of testing different combinations to find the best setup. You train multiple versions of the model, compare their results on held-out data (ideally a validation set rather than the final test set, so the test score stays an honest check), and pick the one that performs best. Once you’ve nailed the optimal hyperparameters, you retrain the model using that configuration so it’s ready for real-world predictions.
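A simple way to picture tuning is a grid search: try each candidate value, score it on held-out data, keep the best. The sketch below swaps in a tiny k-nearest-neighbours model, where the hyperparameter is `k`, purely because it fits in a few lines; it is not the model used in the FPL project, and the data and candidate values are invented.

```python
def knn_predict(train_rows, x, k):
    """Average the targets of the k training rows closest to x."""
    nearest = sorted(train_rows, key=lambda row: abs(row[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def validation_error(train_rows, val_rows, k):
    """Mean squared error on the validation rows for a given k."""
    errors = [(knn_predict(train_rows, x, k) - y) ** 2 for x, y in val_rows]
    return sum(errors) / len(errors)


# Hypothetical (minutes_played, points) rows, invented for illustration.
train_rows = [(10, 1.0), (20, 1.5), (30, 2.0), (45, 2.5), (60, 3.5), (75, 4.0), (90, 5.0)]
val_rows = [(25, 1.8), (50, 3.0), (80, 4.5)]

# The grid: every hyperparameter value we want to try.
grid = [1, 3, 5]
scores = {k: validation_error(train_rows, val_rows, k) for k in grid}
best_k = min(scores, key=scores.get)

# "Retrain" with the winning setting on all available data.
# For k-NN that simply means keeping every row around for lookups.
final_rows = train_rows + val_rows

for k, score in scores.items():
    print(f"k={k}: validation error {score:.3f}")
print(f"best k: {best_k}")
```

With several hyperparameters the grid becomes every combination of values, which is why tuning gets expensive quickly and smarter search strategies exist.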

Now that we’ve covered the foundations of machine learning, it’s time to put theory into practice. In the next part, we’ll apply these concepts to the FPL project and show how a real-world example brings everything together – from feature engineering to inference and tuning – to predict player performance.

BW

You can go back to the case study here.